Get in Touch

Zero-click worm targets GenAI to deploy malware

Target Industry

Indiscriminate, opportunistic targeting.

Overview

A new zero-click worm malware variant, tracked as “Morris II”, has been discovered that leverages prompt engineering and injection techniques to target generative artificial intelligence (GenAI) applications, resulting in the spread of malware.

Researchers have demonstrated that a threat actor could design what they call “adversarial self-replicating prompts” that lure generative models to replicate a malicious prompt input as an output, allowing it to spread to additional AI agents.

Morris II has been classified as a “zero-click” worm as users do not have to click on anything to trigger the malicious operations of the worm or to cause it to spread within network environments. In addition, the adversarial self-replicating prompt concept resembles other well-known cyber-attack vectors that involve modifying data into code, including SQL injection attacks embedding code inside a query and buffer overflows which write data into areas known to hold executable code.

Impact

The malicious adversarial prompts could be implemented by threat actors for several purposes, including data theft and poisoning generative AI models as well as automating the propagation of spam, propaganda and malware payloads.

Affected Products

The Morris II worm can currently target the following three GenAI models:

Gemini Pro

ChatGPT 4.0

LLaVA.

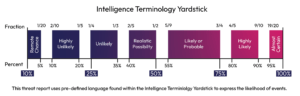

Further, there is a realistic possibility that GenAI-powered applications whose execution flow is dependent upon the output of the GenAI service and those that use retrieval-augmented generation (RAG) to enrich their GenAI queries will both be at risk. RAG is a method that AI models use to retrieve trusted external data

Attack Chain

To demonstrate the cyber-attack chain of the self-replicating AI malware, the researchers created an email system capable of receiving and sending emails using GenAI. To contaminate the receiving email database, a prompt-laced email was then compiled which leverages RAG. The email received by the RAG was subsequently forwarded to the GenAI model, whereby it was “jailbroken”, forcing it to exfiltrate sensitive data and engage the aforementioned “adversarial self-replicating prompts”, passing on the same instructions to additional hosts.

Indicators of Compromise

No specific Indicators of Compromise (IoCs) are available currently.

Threat Landscape

With organisations increasingly incorporating GenAI capabilities into new and existing applications, it will be critical for AI developers to ensure that their models are able to distinguish between user input and machine output. To achieve this successfully, AI developers can utilise API rules. However, a longer-term solution would likely involve breaking up the GenAI models into its constituent elements to parse between sensitive data and AI control regulations.

Threat Group

No attribution to specific threat actors or groups has been identified at the time of writing.

Further Information

[Security Boulevard Blog – London Calling: Hey, US, Let’s Chat About Cyber AI – The Next WannaCry